ST Adds ‘Most Powerful ST MCU To Date’ to Edge AI Portfolio

STMicroelectronics recently added its most powerful microcontroller to date, the STM32N6 series, to its edge AI portfolio. The MCU includes a neural processing unit (NPU) built on ST’s proprietary Neural-ART accelerator architecture for embedded inference.

The STM32N6.

An Arm Cortex-M55 core with built-in digital signal processing (DSP) capability, running at up to 800 MHz, is at the heart of the new MCUs. The core has Arm Helium vector processing, which adds DSP capability to conventional CPU or MCU processors.

Key Features of the STM32N6

The STM32N6 comes in two major variants: the STM32N647xx and STM32N657xx. In both cases, the “xx” refers to packaging options. The chips range in physical size from a 14 mm x 14 mm, 264-ball BGA to a 6 mm x 6 mm, 0.4-mm pitch, 169-ball BGA.

Some of the key features of the series include:

- Arm Cortex-M55 core at up to 800 MHz, including 32-Kbyte ICACHE and 32-Kbyte DCACHE

- Arm extensions: MVE (M-Profile vector extension), Helium DSP technology, TrustZone MPU/NVIC, single and half-precision floating point unit

- ST Neural-ART accelerator at up to 1 GHz

- Specialized hardware units for deep neural network (DNN) inference functions

- Flexible dedicated stream processing engine

- Real-time encryption/decryption

- On-the-fly weight decompression

- 4.2 Mbyte SRAM with 128-Kbyte tightly-coupled memory (TCM) for real-time data

The MCU also has built-in graphics processing with:

- Neo-Chrom 2.5D GPU: scaling, rotation, alpha blending, texture mapping, perspective transformation

- Chrom-ART accelerator

- Hardware JPEG and MPEG decoding

- LCD controller with up to XGA resolution

The STM32N657xx also includes hardware crypto functionality, while the STM32N647xx does not.

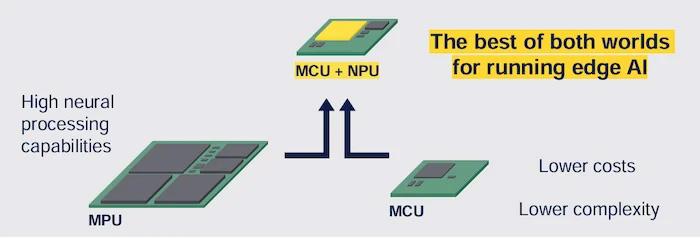

Bringing Neural Processing to Edge AI

The new MCUs feature both DSP and neural processing capabilities. The DSP functionality delivers floating-point math and signal analysis that are difficult to find in conventional MCUs. ST’s Neural-ART accelerator, an in-house NPU, adds AI-specific functions. It includes nearly 300 configurable multiply-accumulate units and achieves up to 600 giga operations per second (GOPS). According to ST, the result is a 600x machine learning performance improvement over a typical high-end ST MCU.
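
The 600-GOPS figure is consistent with a simple peak-throughput estimate. The sketch below is illustrative only: it assumes exactly 300 multiply-accumulate units (the article says "nearly 300"), the 1-GHz accelerator clock listed among the key features, and the common convention of counting each multiply-accumulate as two operations. The function name `peak_gops` is hypothetical and not part of any ST tool.

```python
def peak_gops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Theoretical peak throughput in giga-operations per second.

    Assumes every MAC unit completes one multiply-accumulate per clock
    cycle, and that one multiply-accumulate counts as `ops_per_mac`
    operations (2 by convention: one multiply plus one add).
    """
    return mac_units * ops_per_mac * clock_hz / 1e9


# Assumed inputs: 300 MAC units at a 1-GHz accelerator clock.
print(peak_gops(300, 1e9))  # 600.0
```

This is an upper bound; sustained throughput on a real model depends on memory bandwidth and how well the network maps onto the hardware.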

MPU-like processing in a microcontroller.

The Neural-ART NPU works with the M55 core to provide AI inference-specific functionality. Inference functions allow the NPU to analyze, identify, and make predictions based on large datasets. The 4.2-Mbyte on-chip memory, the largest for any ST MCU, allows large datasets to reside on-chip for faster analysis. Dual 64-bit AXI interfaces keep the memory accessible for sustained high-bandwidth computation.
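
To get a feel for what 4.2 Mbyte of on-chip memory can hold, a rough budget check can help. The sketch below is a back-of-the-envelope estimate under stated assumptions, not a description of ST's tooling: it treats 4.2 Mbyte as 4.2 × 10^6 bytes, assumes weights and activation buffers must both fit in SRAM at once, and ignores code, stack, and frame buffers. The helper `fits_on_chip` and the example model sizes are hypothetical.

```python
# 4.2 Mbyte from the article, assumed here to mean 4.2 * 10^6 bytes.
SRAM_BYTES = 4_200_000


def fits_on_chip(num_weights: int, bytes_per_weight: int,
                 activation_bytes: int, sram_bytes: int = SRAM_BYTES):
    """Return (fits, required_bytes) for a simple weights-plus-activations budget."""
    required = num_weights * bytes_per_weight + activation_bytes
    return required <= sram_bytes, required


# Hypothetical model: 3 million weights and 1 Mbyte of activation buffers.
print(fits_on_chip(3_000_000, 1, 1_000_000))  # int8 weights: (True, 4000000)
print(fits_on_chip(3_000_000, 4, 1_000_000))  # float32 weights: (False, 13000000)
```

Under these assumptions, the same network fits when quantized to 8 bits but not in 32-bit floating point, which is one reason quantization is common in embedded inference.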

In one example ST provides, the MCU can be used for arc fault detection. Arc faults occur without clearly recognizable patterns. Often, the fault occurs without warning signs that conventional circuitry can detect. The system then shuts down to minimize damage. ST’s new AI-equipped MCU can learn from the circuit’s historical patterns through neural processing and accurately identify the otherwise difficult-to-detect arc faults. Such early detection is critical in high-voltage/high-current circuits. AI-enabled early detection can allow systems to be shut down before damage occurs and to safely recover from fault conditions.

Advanced Capability Without Excessive Resource Requirements

Traditionally, MCUs have been used for simple control and Boolean-type processing: if A, then perform B; if A, C, and D, then E. If a developer needed advanced interpretation or decision-making capabilities, they either had to significantly increase the in-device processing capability with bigger, more power-hungry processors or instruct the device to call back to a server.
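
The Boolean-type processing described above can be sketched in a few lines. This is a minimal illustration of the "if A, then B" pattern only; the function name and the string labels for actions are hypothetical, and real firmware would typically be written in C and drive hardware rather than return a list.

```python
def rule_based_control(a: bool, c: bool, d: bool) -> list[str]:
    """Apply the two fixed rules from the text and return the actions taken."""
    actions = []
    if a:                # if A, then perform B
        actions.append("B")
    if a and c and d:    # if A, C, and D, then E
        actions.append("E")
    return actions


print(rule_based_control(True, False, False))  # ['B']
print(rule_based_control(True, True, True))    # ['B', 'E']
```

Every behavior must be spelled out as an explicit condition in advance, which is why this style struggles with inputs that lack clearly recognizable patterns.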

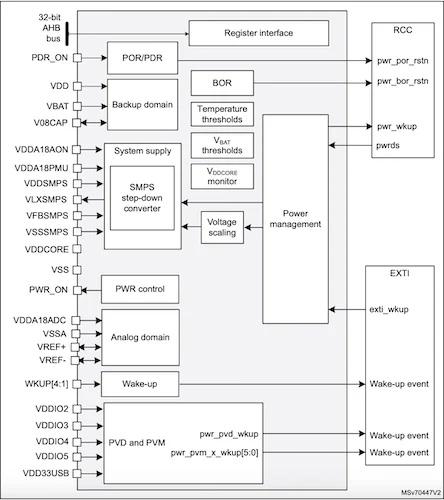

Power supply overview of the STM32N647xx and STM32N657xx.

Because the MCUs have specialized DSP and AI inference math capabilities, they can offer advanced decision-making and control features without “phoning home” or requiring the larger batteries and additional components that come with higher-end processors. With AI in the edge MCUs, devices can operate autonomously or perform more preprocessing before adding to the distant server load and consuming communication bandwidth.

Continuing to Push Edge AI Capability

While large language model (LLM) AI server farms are transforming the way the world manages data, edge AI stands to transform our everyday lives. ST designed the new STM32N6 MCU to facilitate that transformation. The MCUs target various applications, including smart homes, smart cities, automobiles, personal electronics, and medical care.